Chi Chen , Bisheng Yang , Shuang Song , Mao Tian , Jianping Li , Wenxia Dai and Lina Fang

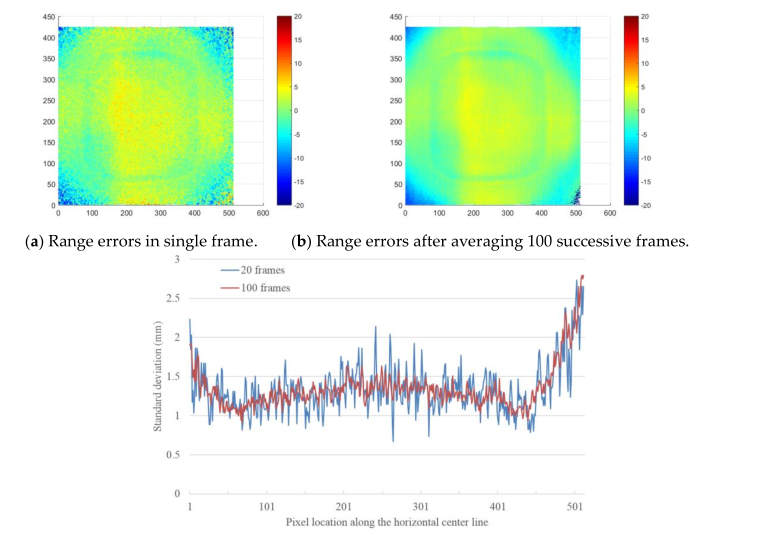

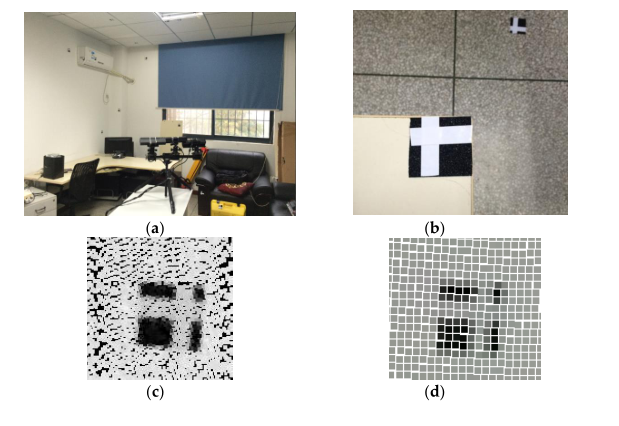

Abstract: Traditional indoor laser scanning trolley/backpacks with multi-laser scanner, panorama cameras, and an inertial measurement unit (IMU) installed are a popular solution to the 3D indoor mapping problem. However, the cost of those mapping suits is quite expensive, and can hardly be replicated by consumer electronic components. The consumer RGB-Depth (RGB-D) camera (e.g.,Kinect V2) is a low-cost option for gathering 3D point clouds. However, because of the narrow field of view (FOV), its collection efficiency and data coverages are lower than that of laser scanners.Additionally, the limited FOV leads to an increase of the scanning workload, data processing burden, and risk of visual odometry (VO)/simultaneous localization and mapping (SLAM) failure.To find an efficient and low-cost way to collect 3D point clouds data with auxiliary information (i.e.,color) for indoor mapping, in this paper we present a prototype indoor mapping solution that is built upon the calibration of multiple RGB-D sensors to construct an array with large FOV. Threetime-of-flight (ToF)-based Kinect V2 RGB-D cameras are mounted on arig with different view directions in order to form a large field of view. The three RGB-D data streams are synchronized and gathered by the OpenKinect driver. The intrinsic calibration that involves the geometry and depth calibration of single RGB-D cameras are solved by homography-based method and ray correction followed by range biases correction based on pixel-wise spline line functions, respectively.The extrinsic calibration is achieved through a coarse-to-fine scheme that solves the initial exterior orientation parameters (EoPs) from sparse control markers and further refines the initial value by an iterative closest point (ICP) variant minimizing the distance between the RGB-D point clouds and the referenced laser point clouds. The effectiveness and accuracy of the proposed prototype and calibration method are evaluated by comparing the point clouds derived from the prototype with ground truth data collected by a terrestrial laser scanner (TLS). The overall analysis of the results shows that the proposed method achieves the seamless integration of multiple point clouds from three Kinect V2 cameras collected at 30 frames per second, resulting in low-cost, efficient, and high-coverage 3D color point cloud collection for indoor mapping applications

Keywords: RGB-D; Kinect; point clouds; registration; calibration; indoor 3D mapping

Download